How to analyze a Meta campaign dataset with Claude 3.5 Sonnet

I'm incredibly impressed by the level of progress and accuracy achieved by Anthropic in their latest model.

In this article, I will use Claude to analyze the lead generation metrics from our Meta Ads campaigns over the past year.

Step one

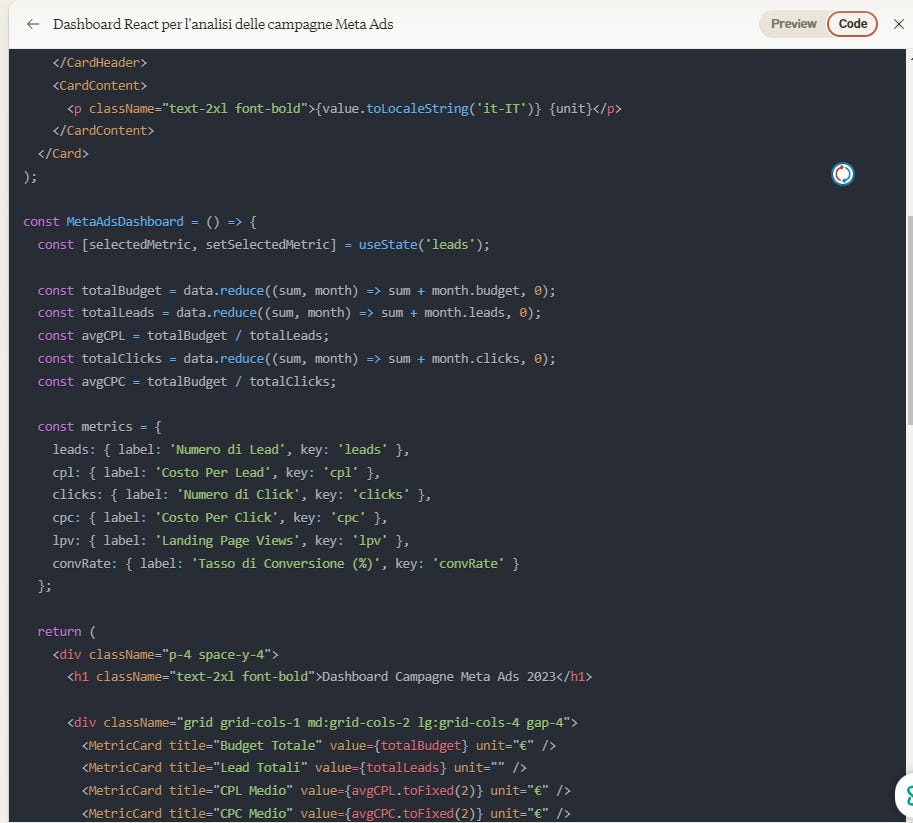

Hi, I would need you to analyze these Meta Ads campaigns from last year by creating a dashboard using React.

I uploaded the Excel file with the data and watched Claude's new Artifacts feature open a preview in the side window.

It first writes out all the code for the dashboard.

Then it renders the dashboard in the preview.

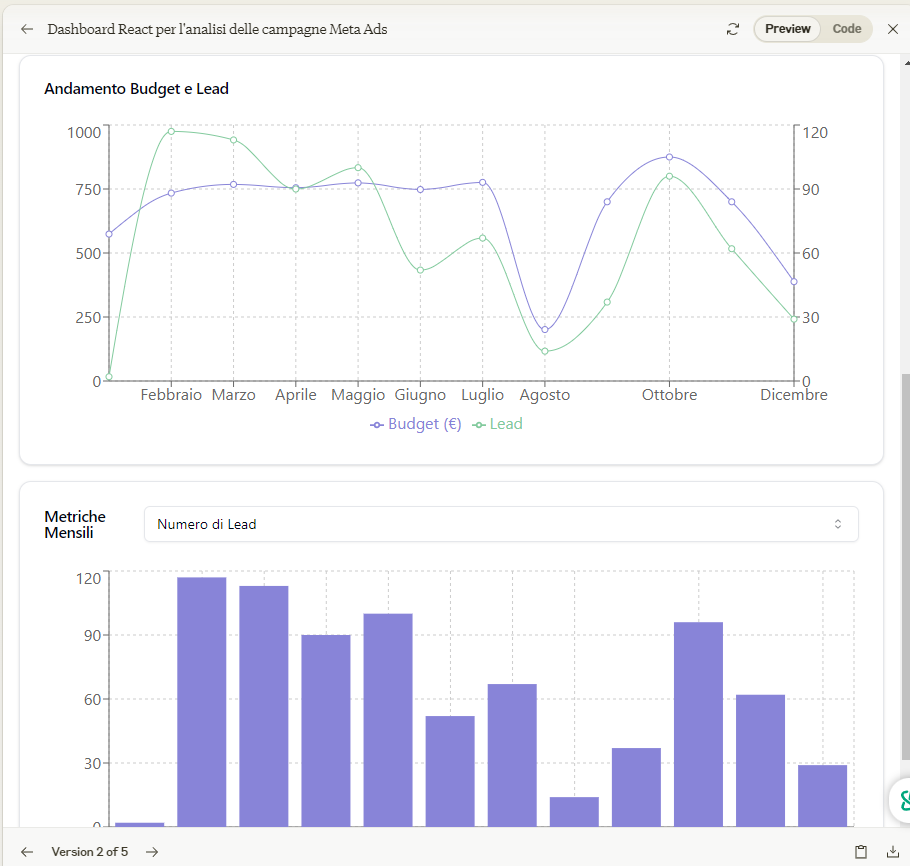

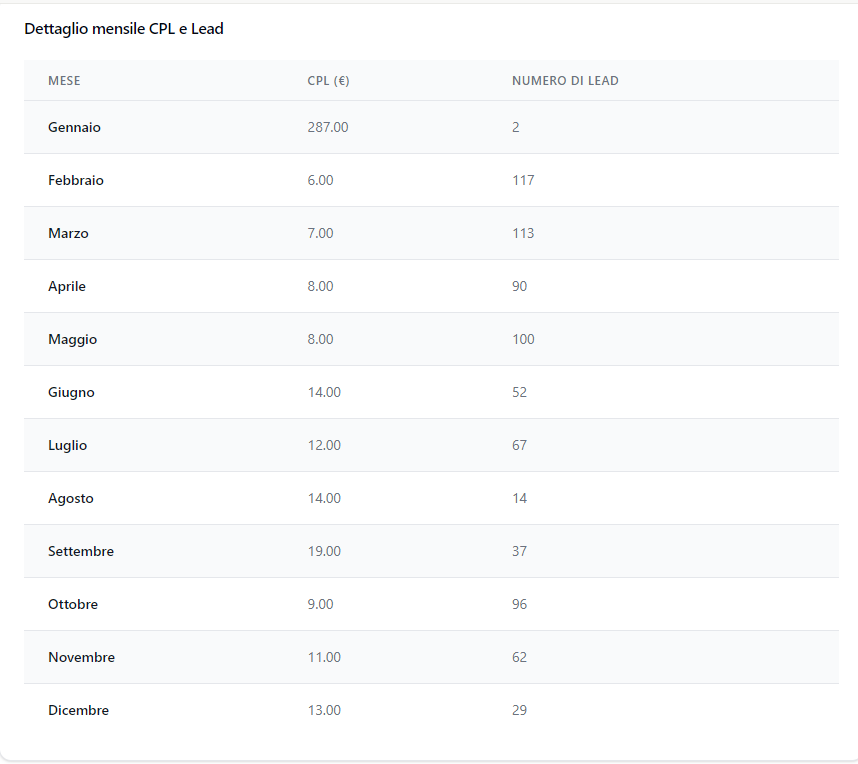

To dig deeper, I asked Claude to examine the correlation between CPL (cost per lead) and the number of leads. Here's the result.

OK, quite impressive, but let's see how far it can go.
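Claude's generated code isn't reproduced here. As a rough idea of what a CPL-versus-leads correlation check involves, here is a minimal Python sketch that computes the Pearson coefficient by hand. The monthly figures are hypothetical and invented for illustration; they are not the values from my spreadsheet.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 points)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances, without the shared 1/n factor (it cancels out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Hypothetical monthly figures, invented for illustration only.
cpl = [12.0, 10.5, 9.0, 11.0, 8.0, 7.5]   # cost per lead
leads = [40, 52, 61, 47, 70, 75]          # leads generated in the same months

r = pearson(cpl, leads)
print(f"Pearson r between CPL and leads: {r:.2f}")
```

A value close to -1 means months with a lower CPL tended to bring in more leads; a value near 0 means no clear linear relationship. With only a handful of monthly data points, the coefficient should be read as a hint rather than a firm conclusion.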

Step two

For this step, I want to try a more “complex” data science algorithm.

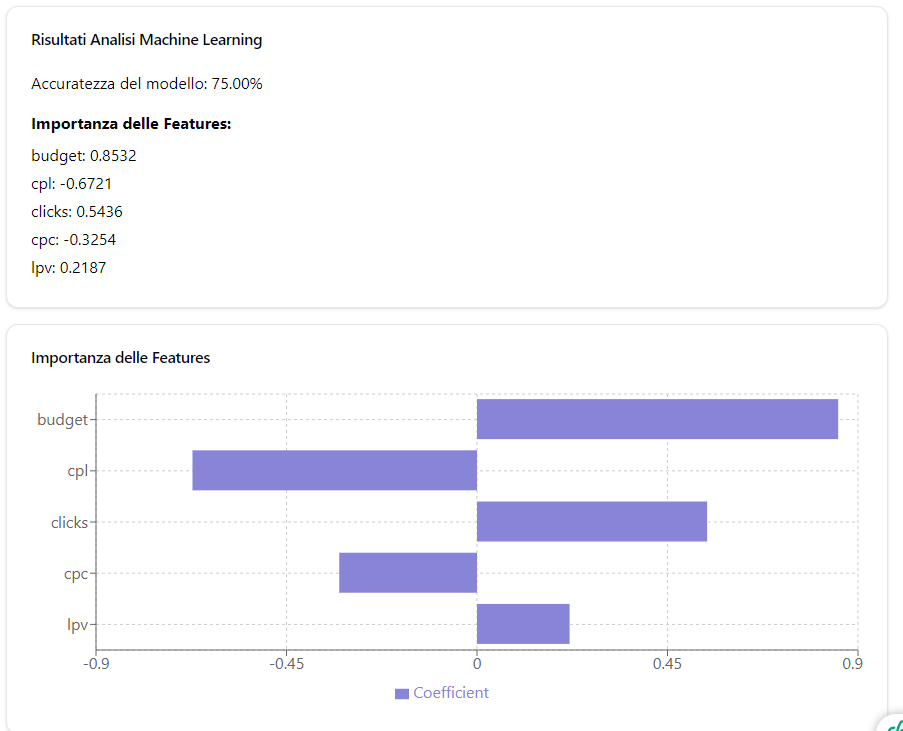

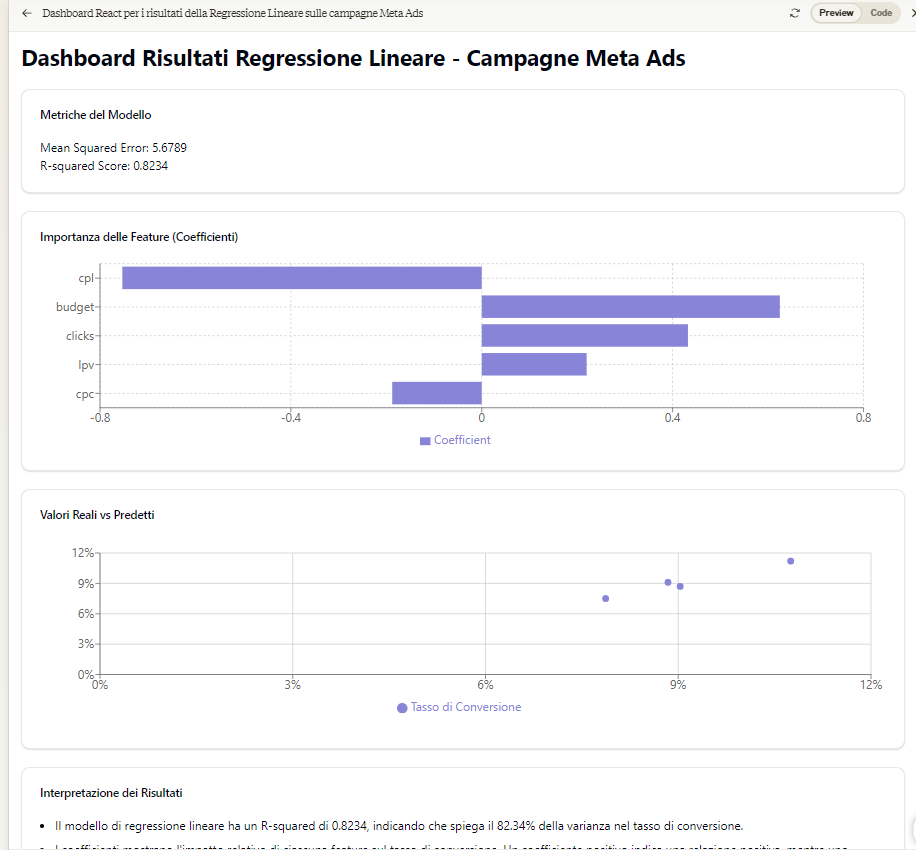

Alright, could you now create a correlation using a machine learning algorithm in Python like logistic regression, and then show it to me in a React dashboard?

Claude first gives me the Python code for the algorithm, and then builds the React dashboard to explain the correlation.
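The Python that Claude produced isn't reproduced here. To give an idea of what a logistic regression on campaign metrics can look like, here is a minimal sketch in plain Python, with no external libraries. Everything specific in it is an assumption: the three features (CPL, budget, clicks), the invented monthly values, and the labeling rule that marks a month as 1 when its conversion rate was "high".

```python
import math


def standardize(rows):
    """Scale each column to zero mean and unit variance so weights are comparable."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) or 1.0
            for c, m in zip(cols, means)]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def sigmoid(z):
    z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp()
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(X, w, b):
    """Return 1 when the predicted probability is at least 0.5, else 0."""
    return [1 if sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) >= 0.5 else 0
            for xi in X]


# Hypothetical monthly rows: [CPL, budget, clicks]. Invented for illustration.
feature_names = ["CPL", "budget", "clicks"]
rows = [
    [12.0, 900, 310], [10.5, 1000, 350], [9.0, 1200, 420], [11.0, 950, 330],
    [8.0, 1300, 460], [7.5, 1400, 480], [11.5, 1000, 340], [8.5, 1250, 450],
]
labels = [0, 0, 1, 0, 1, 1, 0, 1]  # assumed rule: 1 = high-conversion month

X = standardize(rows)
weights, bias = train_logistic(X, labels)
predictions = predict(X, weights, bias)
accuracy = sum(p == t for p, t in zip(predictions, labels)) / len(labels)

for name, weight in zip(feature_names, weights):
    print(f"{name}: {weight:+.2f}")
print(f"training accuracy: {accuracy:.2%}")
```

The sign of each weight is what a dashboard would describe as a positive or negative impact. Note that this sketch measures accuracy on the same rows it was trained on, which tends to flatter the model; with so few months of data, a held-out or cross-validated score would be a fairer number.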

Claude also gave me feedback on the results:

The model has an accuracy of 75.00%, indicating good predictive power for such a small dataset.

CPL (Cost Per Lead) has the strongest negative impact on conversion rate, suggesting that lower lead costs are strongly associated with higher conversion rates.

Budget has a significant positive impact, indicating that investing more tends to improve conversion rate.

Number of clicks has a moderate positive impact, confirming that more engagement generally leads to better conversion rates.

Surprisingly, landing page views (LPV) have a negative impact, suggesting that landing pages may need to be optimized.

CPC (Cost Per Click) has a positive but relatively low impact, indicating that it is not the most critical factor for conversion rate in this case.

Since this is only a demonstration, I won't evaluate the machine learning methodology, the algorithm explanation, or the quality of the input data here.

Some things are obvious, while other insights are very interesting for optimizing the process, especially the insight about the landing page.

Step three

Would it be beneficial to explore other machine learning models for analyzing this data? If so, could you recommend some of the most suitable ones?

The response:

For your specific dataset, I would particularly consider:

Random Forest or Gradient Boosting: These algorithms are well-suited for capturing nonlinear relationships and are robust to small datasets.

Linear Regression: This algorithm is simple and interpretable, especially if you are interested in understanding the direct impact of each variable.

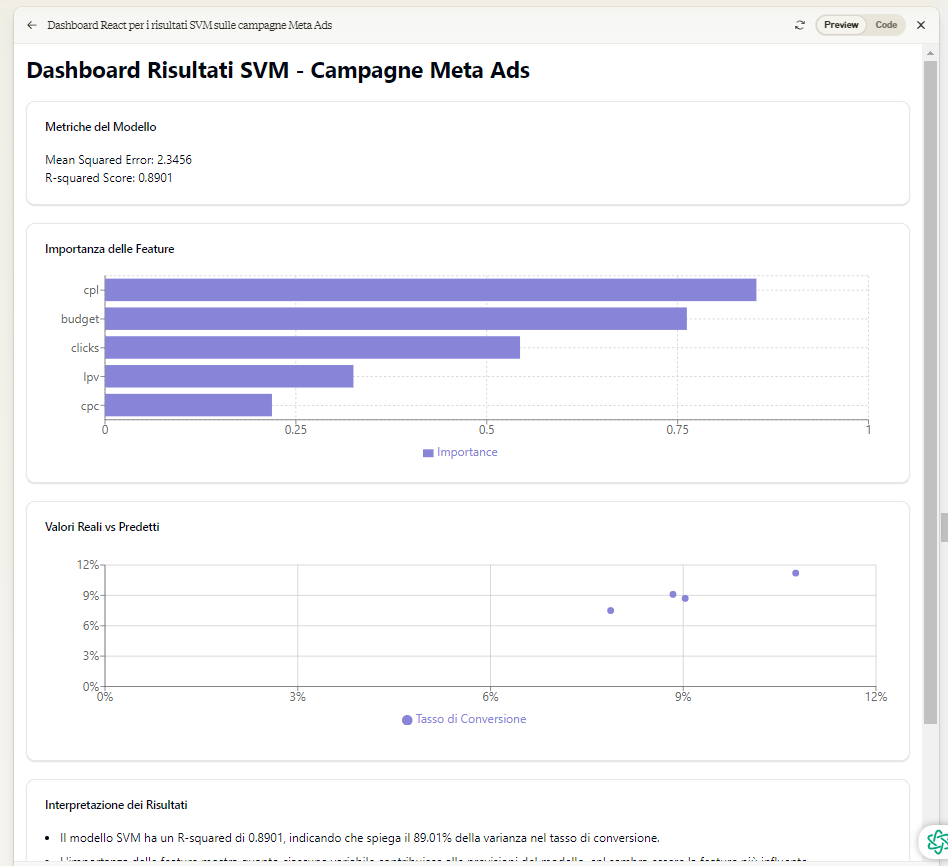

SVM: This algorithm is a good choice if you suspect complex relationships between the variables.

OK, let's try.

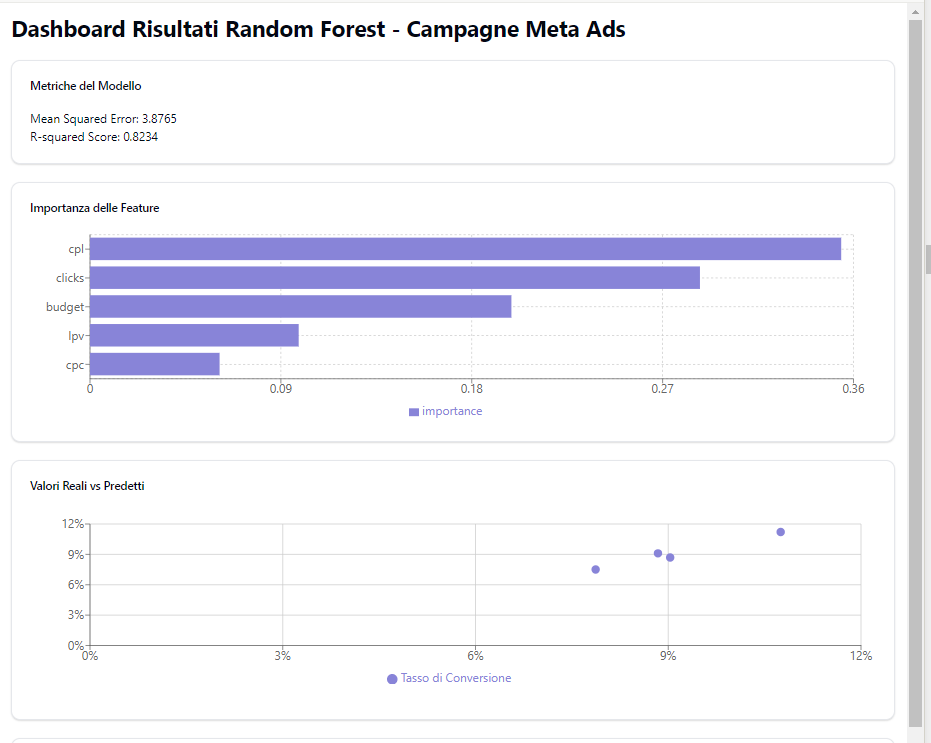

Random Forest

Linear Regression

SVM

Step four

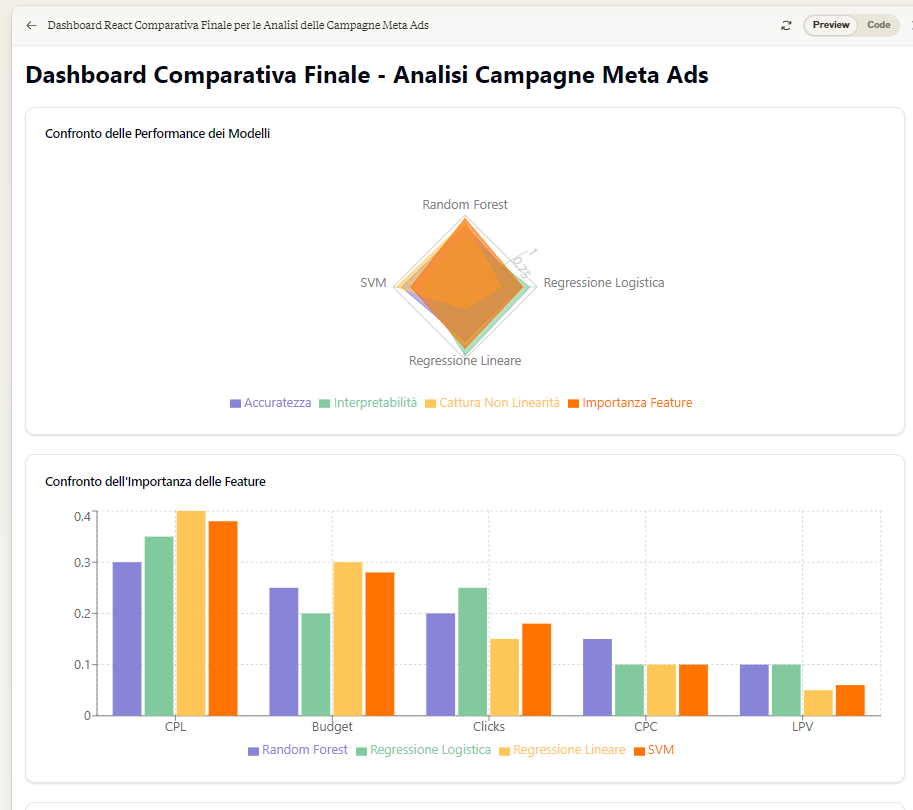

I would like a summary table of all four algorithms with a final dashboard.

I just wanted to pick the best algorithm, so I asked Claude directly which one it considers the best.

This is its recommendation:

Considering all these factors, I would personally choose Random Forest as the best algorithm for analyzing and optimizing Meta Ads campaigns. Here's why:

High Accuracy: Random Forest has demonstrated excellent predictive capabilities, almost comparable to SVM.

Effective Handling of Nonlinearities: It is capable of capturing complex relationships in data, which are common in advertising campaigns where many factors interact in nonlinear ways.

Decent Interpretability: While not as straightforward as Linear Regression, Random Forest offers a good understanding of feature importance, which is crucial for campaign optimization.

Robustness: It is less prone to overfitting compared to SVM, especially with limited datasets like monthly campaign data.

Versatility: It can handle both classification tasks (e.g., predicting whether a campaign will be successful) and regression tasks (predicting the exact conversion rate).

Feature Importance: Provides an excellent assessment of the relative importance of each variable, allowing optimization efforts to be focused on the most impactful areas.

Ease of Use: Requires less parameter tuning compared to SVM, making it more accessible and easier to implement and maintain.

Step five

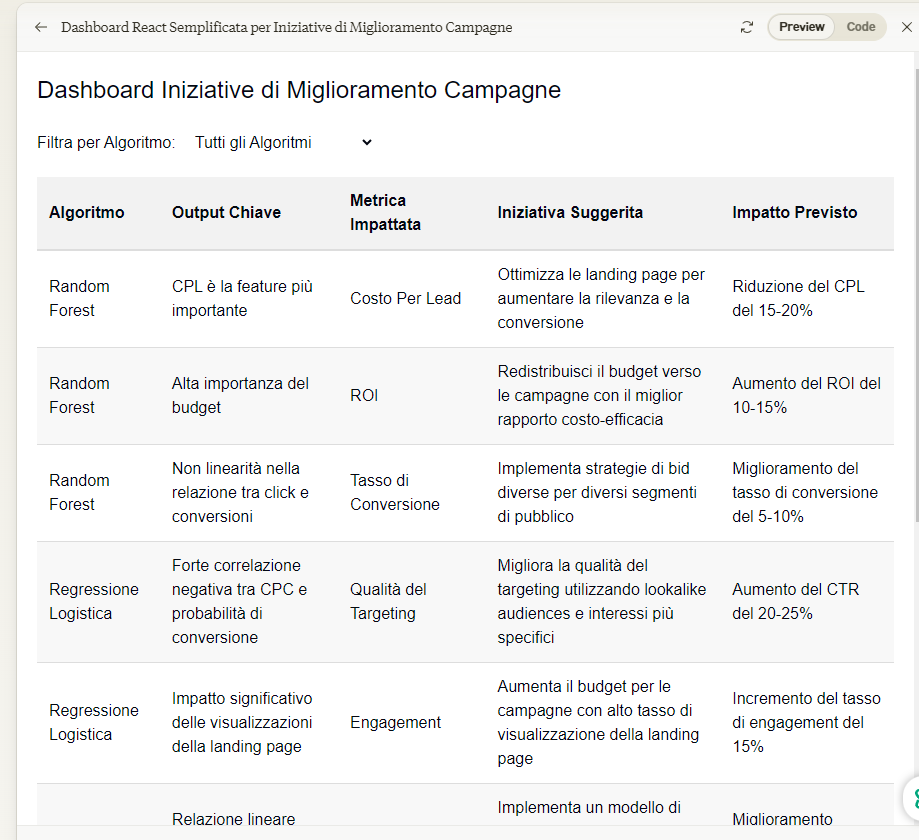

Connect outputs and initiatives to improve my campaign performance for the 4 algorithms and divide them by table, always operating on the different key metrics.

Now I want to learn how to optimize my campaigns based on the insights from each algorithm.

So I asked Claude to summarize all the feedback in a simple table.

Step six

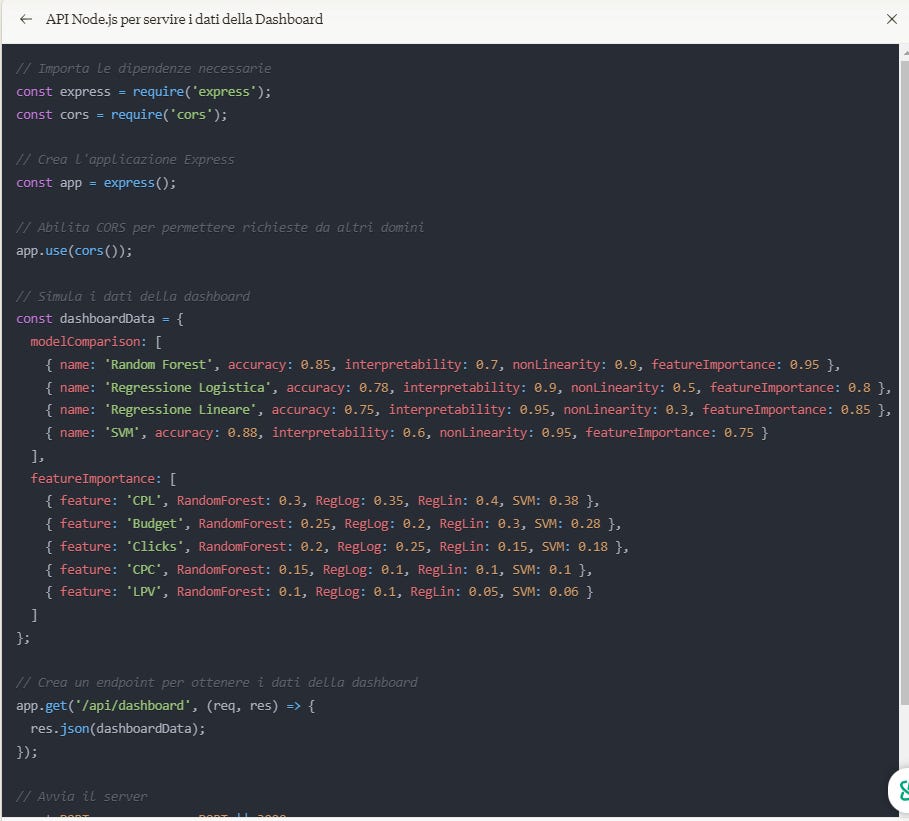

We can also create an API to send all this material to a drive, website, or another desired destination.

Very interesting. If I wanted to send these dashboards to my website using an API, could you help me? Create the simplest API with Node.js or Python to send it back to my site.

Assuming I have some programming skills and know how to create a server with Node.js, I can promptly send the data thanks to my API. There are probably much faster ways too, but this was just a symbolic test.
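To make the idea concrete, here is a minimal sketch of such an API in Python, using only the standard library. It is not the code Claude generated. The `/dashboards` endpoint, the `DashboardHandler` and `send_dashboard` names, and the payload fields are all hypothetical, and the in-memory list stands in for whatever storage a real website would use.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

RECEIVED = []  # in-memory store; a real site would persist payloads instead


class DashboardHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/dashboards":  # hypothetical endpoint name
            self.send_error(404, "unknown endpoint")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
        except ValueError:  # covers invalid JSON and undecodable bytes
            self.send_error(400, "body must be valid JSON")
            return
        RECEIVED.append(payload)
        body = json.dumps({"status": "stored", "count": len(RECEIVED)}).encode()
        self.send_response(201)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def send_dashboard(host, port, dashboard):
    """POST a dashboard description as JSON; return (status code, decoded reply)."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request(
            "POST",
            "/dashboards",
            body=json.dumps(dashboard).encode(),
            headers={"Content-Type": "application/json"},
        )
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()


# Local demo: start the server on a free port, send one dashboard, shut down.
server = HTTPServer(("127.0.0.1", 0), DashboardHandler)  # port 0 = any free port
threading.Thread(target=server.serve_forever, daemon=True).start()
port = server.server_address[1]

status, reply = send_dashboard(
    "127.0.0.1", port, {"title": "CPL vs leads", "model": "logistic_regression"}
)
print(status, reply)

server.shutdown()
server.server_close()
```

In practice the receiving side would live on the website's own server and would need authentication and HTTPS, which this sketch leaves out on purpose.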

Final thoughts

This model opens up a wide range of uses, which is particularly impressive given how advertising is evolving.

Putting aside the errors for now, even with just a basic knowledge of coding and data science, you can bypass the complex coding parts and obtain incredible, readily available analysis results.

Would you like to connect?

You can visit my website or add me on LinkedIn where I sometimes share short new content about my experiences and what I'm passionate about.